“We are completely fine losing $9.7M a year thanks to poor quality data,” said no one, EVER. But yes, you read that number right. According to Gartner, businesses lose an average of $9.7 million annually due to poor quality data. In other words, the cost of bad data is high enough to potentially cripple a business.

In this article, we’ll dive into how data quality issues can steer companies in the wrong direction – and what your team can do to prevent this.

The cost of bad data goes well beyond your marketing team

Many marketers say all data looks the same, and it can be hard to distinguish between good and bad data. Considering that US marketers spend 11 billion dollars each year on data, you can see this is a HUGE problem. Even more so considering 90% of them rely on data to deliver personalized experiences.

But this issue faces everyone, from product organizations innovating to stay ahead of consumer trends to underwriting teams that need to identify and acquire new applicants quickly to deliver loans and policies. Bad data can be ‘poison in the well’ for many data-driven organizations.

The most common data quality issues

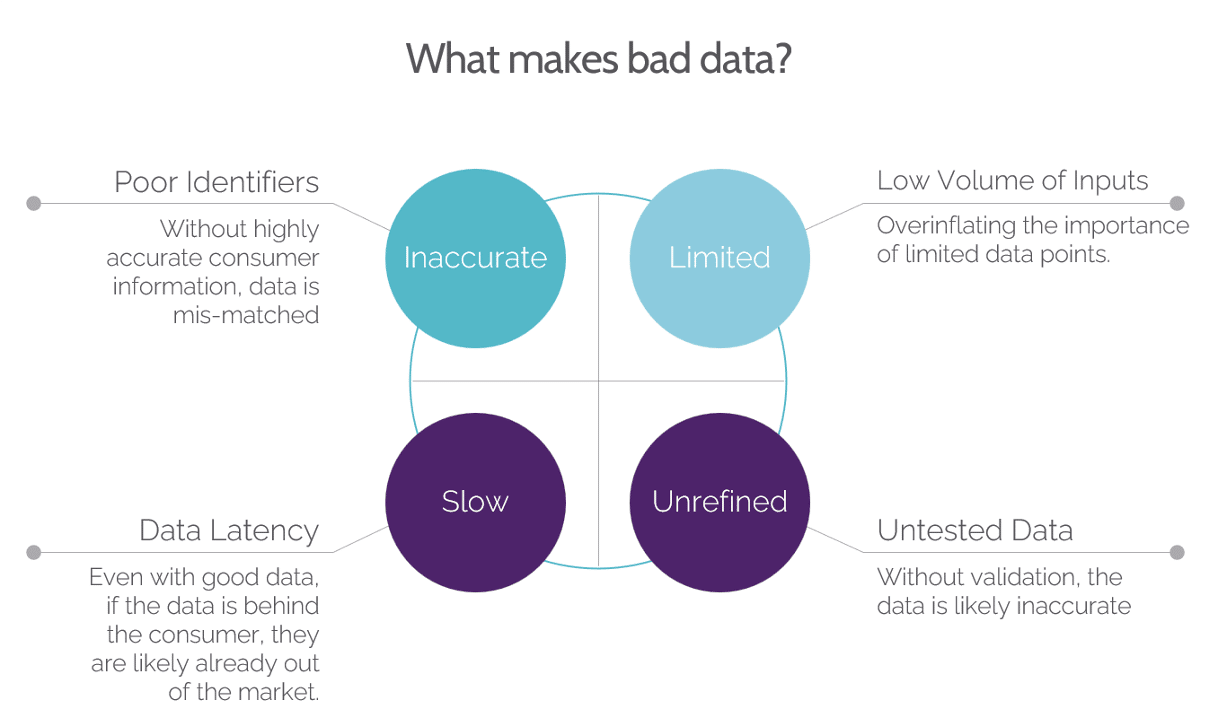

Inaccurate

This can seem overly obvious, but inaccurate data is invited into major corporations every day – undetected.

Data can be inaccurate for a number of reasons:

- Latency: The data used to be correct, but has now become out of date. How important is it to target new movers versus a family that relocated 18 months ago? Very important for insurance companies that depend on timely messaging around key events. Concerns over data latency are especially critical for brands targeting audiences within specific lifestages like young families, new movers, or newly formed businesses.

- Low volume of inputs: Using a low volume of contributing sources is one lever data creators can use to keep costs down. However, that means instead of validating a conclusion against a number of sources, they are drawing a conclusion based on a smaller and less reliable volume.

As an example, if a consumer bought a hunting T-shirt once, they could be identified as a “hunting enthusiast” when really the purchase was an isolated event (a gift for someone else), and they have zero interest in hunting. If the data creator is drawing conclusions on the data from a number of sources, they would see that the consumer has never shown any other hunting related tendencies.

- Untested: Without continual testing, data creators can’t identify their accuracy gaps, which leaves those gaps unresolved. This is where regular data quality assessments help them identify areas of lower accuracy and improve their sources or tactics.
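The hunting T-shirt example above can be sketched in a few lines of Python. This is only an illustration: the signal tuples, the source names, and the `MIN_SOURCES` threshold are assumptions made for the sketch, not a description of how any particular data creator builds its labels.

```python
from collections import defaultdict

# Hypothetical signals: (consumer_id, interest, source). All values are illustrative.
signals = [
    ("c1", "hunting", "retail_purchase"),        # the one-off T-shirt gift
    ("c2", "hunting", "retail_purchase"),
    ("c2", "hunting", "magazine_subscription"),
    ("c2", "hunting", "license_registry"),
]

MIN_SOURCES = 2  # assumed threshold; tune it against tested accuracy


def corroborated_interests(signals, min_sources=MIN_SOURCES):
    """Return {consumer_id: interests} backed by at least min_sources distinct sources."""
    sources = defaultdict(set)
    for consumer_id, interest, source in signals:
        sources[(consumer_id, interest)].add(source)

    result = defaultdict(set)
    for (consumer_id, interest), seen in sources.items():
        if len(seen) >= min_sources:
            result[consumer_id].add(interest)
    return dict(result)


labels = corroborated_interests(signals)
print(labels)  # c2 is labeled; c1's single purchase is not enough
```

With a single contributing source, both consumers would look identical. Requiring agreement across sources is what separates the gift buyer from the enthusiast.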

Inaccurate data leads to poor decision-making – the very thing that most companies shopping for data are trying to avoid.

Duplicated data due to poor identifiers

Duplicated data in a dataset will most definitely skew the results of any analysis, driving misguided conclusions and decision-making. If the external data your company acquires doesn’t come with solid consumer information and accurate identifiers, it fails to match well with your internal data assets, resulting in duplicate records. Those duplicate records turn into duplicate messages, causing waste and bad consumer experiences.

You can also run into compliance issues, as some industries and regions are required to follow strict data privacy regulations. Duplicated data may therefore result in a breach of privacy rules and regulations.

And last but not least, having duplicate data will require more storage space, which can be costly.
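As a rough illustration of why identifiers matter, the sketch below collapses records that differ only in formatting. The field names and the choice of email plus ZIP as a match key are assumptions made for the example; real identity resolution usually relies on richer identifiers.

```python
import re

# Hypothetical records; field names are illustrative only.
records = [
    {"name": "Jane Doe", "email": "Jane.Doe@Example.com ", "zip": "30301"},
    {"name": "jane doe", "email": "jane.doe@example.com", "zip": "30301"},
    {"name": "John Roe", "email": "john.roe@example.com", "zip": "10001"},
]


def match_key(record):
    """Build a normalized key: trimmed, lowercased email plus the first five ZIP digits."""
    email = record["email"].strip().lower()
    zip_code = re.sub(r"\D", "", record["zip"])[:5]
    return (email, zip_code)


def deduplicate(records):
    """Keep the first record seen for each match key."""
    unique = {}
    for record in records:
        unique.setdefault(match_key(record), record)
    return list(unique.values())


deduped = deduplicate(records)
print(len(records) - len(deduped), "duplicate record(s) removed")
```

Without the normalization step, the two Jane Doe rows would be treated as different people and each would receive the same message.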

Unstructured

Once data has been secured and is being brought within your walls, you face a new set of data quality issues. First is unstructured data, which is not organized in a pre-defined manner, making it difficult for teams across the organization to access, retrieve, and analyze.

Most analytical tools and systems work with structured data, meaning they may not be able to handle unstructured information that lacks consistent formatting, making it hard to identify errors or inconsistencies.

Hard to access

This is quite self-explanatory! But it happens. Failing to provide ease of access and clarity around how to interpret your dataset can become a real problem.

The consequences of using bad data to support your marketing and business decisions

Now that we’ve gone through the most common data quality issues, let’s take a deeper look at the consequences these can lead to.

Bad data can lead to targeting the wrong audience, sending the wrong message, drawing incorrect conclusions, and even causing a loss of trust amongst the teams working with the dataset.

Inaccurate data can blindside you and your teams, resulting in lost opportunities – such as not identifying the right target audience or a key trend in the market.

Confusion

You’re only as smart as the quality of your data. It may seem obvious, but bad data can lead to confusion across your organization due to inconsistency, incompleteness, and incompatibility. Not only that, you can also confuse the very people you’re hoping to engage by sending mismatched, duplicative, or irrelevant communications.

High customer acquisition costs

If a company is using bad data, it may be reaching out to individuals who are not interested in its products or services, or who do not fit its target demographic. This can result in a high cost per lead or customer acquisition, as the company is spending resources on ineffective marketing efforts, eating up valuable time and resources.

Additionally, bad data can also lead to poor customer segmentation and targeting.

Failure to deliver a great customer experience at scale

One of the MAIN concepts for efficient business intelligence is…know your customer. Data is a top tool to deliver exactly that. It’s the vehicle you use to fully, and deeply understand your audience.

As mentioned before, if you are working with a dataset that has duplicates, you can end up sending multiple emails with the same content to your audience. This can create frustration and confusion.

Nowadays, in an era where people value customer service more than ever – and do so in a very public way – it’s not worth putting your brand’s values and reputation on the line. Every company has raving fans, people who are just not interested, and haters, but the key here is to avoid losing people simply because your data was a mess.

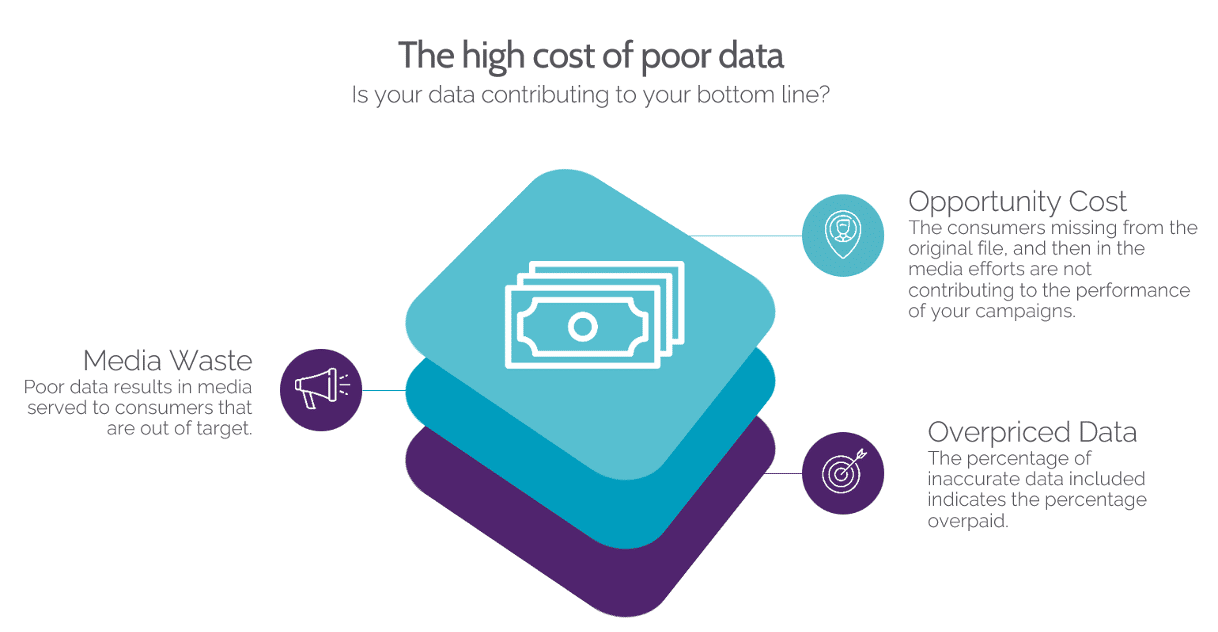

Media waste

Data quality issues will lead to poor segmentation, poor targeting, and a lack of understanding of where your consumers are in their buyer journey. The high cost of media based on bad data is not to be overlooked. Working with a dataset that fully understands your audience will help you talk to them at the right time, with the right words, and in the right place.

Consider this – many brands realize that their data file may include 10-15% inaccurate records at any given time. What they fail to consider is that all the media costs associated with that 10-15% of records are wasted. With the skyrocketing cost of high performance media, that failure alone can result in a loss of millions for large and mid-sized marketing teams.
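To put rough numbers on that, here is a back-of-the-envelope sketch. The $20 million budget is a hypothetical figure chosen for illustration, and the calculation assumes media spend is spread evenly across records, which will not hold exactly in practice.

```python
def wasted_media_spend(annual_media_spend, inaccurate_share):
    """Spend attributed to inaccurate records, assuming spend is spread evenly across records."""
    if not 0 <= inaccurate_share <= 1:
        raise ValueError("inaccurate_share must be between 0 and 1")
    return annual_media_spend * inaccurate_share


spend = 20_000_000  # hypothetical annual media budget, in dollars
low = wasted_media_spend(spend, 0.10)
high = wasted_media_spend(spend, 0.15)
print(f"Estimated waste: ${low:,.0f} to ${high:,.0f}")
```

Under these assumptions, the 10-15% range translates to roughly $2 million to $3 million a year spent reaching records that were never going to convert.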

Reactive instead of deliberate A/B testing

Testing is fundamental. Would you prefer to test by basing your conclusions on good data or poor data?

Playing around with duplicated, unstructured, incomplete, or inaccessible data will indeed lead to poor performance as it puts you in a disadvantaged position for calling the shots.

Overpriced data

There’s a reason why this article is about the high cost of bad data! It’s because once you identify the portions of data that are inaccurate, you need to figure out how much you overpaid for it – so it doesn’t happen again. Proper data selection from the get-go is the name of the game. If 20% of your data is found to be inaccurate or unreliable, you are overpaying for your data by at least 20%. Basically, what may seem like a ‘bargain’ is not.
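The “at least” in that 20% figure is worth a quick calculation. In the sketch below, the price per record is a made-up number; only the 20% inaccuracy share comes from the example above.

```python
def effective_cost_per_usable_record(price_per_record, inaccurate_share):
    """Price actually paid for each record you can use."""
    if not 0 <= inaccurate_share < 1:
        raise ValueError("inaccurate_share must be at least 0 and less than 1")
    return price_per_record / (1 - inaccurate_share)


list_price = 0.10  # hypothetical price per record, in dollars
share_bad = 0.20   # the 20% inaccuracy share from the example above

effective = effective_cost_per_usable_record(list_price, share_bad)
markup = effective / list_price - 1
print(f"Effective cost per usable record: ${effective:.3f} ({markup:.0%} above list price)")
```

In other words, 20% of the spend buys nothing, and each record you can actually use ends up costing 25% more than the listed price.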

Running a data quality assessment: what are the attributes of high quality data?

The gold standard for high quality data is how PREDICTIVE it is. That’s why brands look into datasets – to be able to predict the behavior of their targeted audiences.

Good data includes information, insights, and even marketing models that drive great results and a positive ROI. Here are the building blocks of predictive, high quality data:

Accuracy

Accuracy in marketing data means you are not left guessing! It means the data provider is leveraging multiple trustworthy and proprietary data sources to curate both accurate and current data.

Timeliness

How current your data is can shift how you map out your strategy. Consumer behavior is constantly changing, and your data is either current – or it’s stale.
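A simple freshness check makes the “current or stale” distinction concrete. The sketch below assumes each record carries a `last_verified` date and uses a 180-day window; both the field name and the window are illustrative assumptions, and the right cutoff depends on the use case.

```python
from datetime import date

MAX_AGE_DAYS = 180  # assumed freshness window; the right value depends on the use case


def is_stale(last_verified, today, max_age_days=MAX_AGE_DAYS):
    """True when a record was last verified longer ago than the allowed window."""
    return (today - last_verified).days > max_age_days


today = date(2024, 6, 1)  # fixed date so the example is reproducible
records = [
    {"id": "new_mover", "last_verified": date(2024, 4, 15)},
    {"id": "moved_18_months_ago", "last_verified": date(2022, 12, 1)},
]

stale_ids = [r["id"] for r in records if is_stale(r["last_verified"], today)]
print(stale_ids)  # ['moved_18_months_ago']
```

For a new mover campaign, the second record would be filtered out before any media dollars are spent on it.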

Granularity

Continuous, granular data can take on any value. As an example, many data creators create extremely large income brackets to segment consumers. While it may be accurate to say Record A has a household income of $101,000 per year, should they really be in the same bracket as Record B who has an income of $149,000 per year? Those two data points perform extremely differently when it comes time to create predictive models. Granular data prevents scores from clumping together in the final model, which often leads to better performance.
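The Record A and Record B comparison can be shown with two sets of bracket boundaries. The boundaries below are hypothetical; actual data providers define their own.

```python
import bisect

# Hypothetical bracket boundaries in dollars; real providers define their own.
COARSE_EDGES = [50_000, 100_000, 150_000]
FINE_EDGES = list(range(10_000, 250_000, 10_000))


def bracket_index(income, edges):
    """Index of the bracket an income falls into, given ascending bracket boundaries."""
    return bisect.bisect_right(edges, income)


record_a, record_b = 101_000, 149_000

same_coarse = bracket_index(record_a, COARSE_EDGES) == bracket_index(record_b, COARSE_EDGES)
same_fine = bracket_index(record_a, FINE_EDGES) == bracket_index(record_b, FINE_EDGES)
print(same_coarse, same_fine)  # True False
```

With the coarse boundaries, a model sees the two households as identical. With the finer ones, or with the raw income value, it can tell them apart.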

Applicability

The more broadly applicable a marketing dataset is, the more likely it is to have some data that is a direct hit for your strategic goals.

For example, insurance behavior or asset data is likely to help drive better results in insurance targeting. Automotive data is likely to help drive better results in automotive marketing. This may seem obvious, but ensuring that all your data options can be leveraged is key.

Uniqueness

Your data modeling should be predictive and proprietary. This means that your particular dataset has the extra ingredient that takes it to the next level.

If you go beyond utilizing only basic information – such as age and gender – you can create a high performing model that helps you launch better marketing campaigns. An efficient approach to this can be to rebuild existing models with a new data source and a different perspective and universe. The result is improved performance.

Coverage

Accurate data alone won’t bring your brand to new heights. High quality data paired with ample coverage will help you reach much more of your target audience. Consider this – you may have the most accurate data in the world, but if the coverage only includes 15% of the population, it’s not a scalable solution to help you achieve growth.

Integrity

Integrity, especially when it comes to identifiers, ensures data relationships are accurate. For example, correctly linking a patient to others within their household better supports accurate social determinants of health (SDOH) data.

Consistency

Once your external data is secured, simple rules of the road are required to help everyone make the most of it. Consistency requires following established patterns and uniformity rules, such as always using a single label like “Zip Code” rather than a mix of “Zip Code”, “ZC”, and “PC”.

Consistency also helps properly identify your potential customers. How can you attract them if you have no way to find them? For example, if you are a company trying to link up data on small and micro businesses, consistent and comprehensive information becomes a must for targeting these silent movers of the economy, which can be hard to track.
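One lightweight way to enforce a naming rule like this is an alias table applied when data is loaded. The sketch below is an assumption about how a team might do it: the `CANONICAL_FIELDS` mapping and the `zip_code` target name are made up for illustration.

```python
# Assumed alias table: which incoming labels map to which canonical field name.
CANONICAL_FIELDS = {
    "zip code": "zip_code",
    "zc": "zip_code",
    "pc": "zip_code",
}


def normalize_record(record):
    """Rename known aliases to their canonical field name; leave other fields untouched."""
    normalized = {}
    for key, value in record.items():
        canonical = CANONICAL_FIELDS.get(key.strip().lower(), key)
        normalized[canonical] = value
    return normalized


rows = [{"Zip Code": "30301"}, {"ZC": "10001"}, {"PC": "94105"}]
print([normalize_record(row) for row in rows])
```

After normalization, every row exposes the same `zip_code` field, so downstream joins and filters no longer need to guess which label a given source used.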

Finding the why: avoiding the high cost of bad data

At the end of the day, the high cost of bad data goes well beyond duplicated or inaccurate information. It’s about NOT understanding your audience.

Your audience is the heart and soul of your business. Shouldn’t they be your company’s TOP priority?

Much of the data available to marketers today answers the question of who people are, like their age and gender.

However, oftentimes data fails to answer the why of an action, a purchase, or a behavior. That’s where our team of industry veterans, data scientists, and cognitive psychologists comes in: What motivates your target audience to make the purchases they make? Let us find out!

Getting to the heart of WHY combined with predictive modeling and trustworthy datasets is the key to consistently driving up performance from the ground up. At AnalyticsIQ, we help you make accurate observations based on comprehensive data. Connect with your audience at a deeper level, and stop wasting valuable resources!