This is a guest post contributed by privacy-enhancing technology innovator Karlsgate.

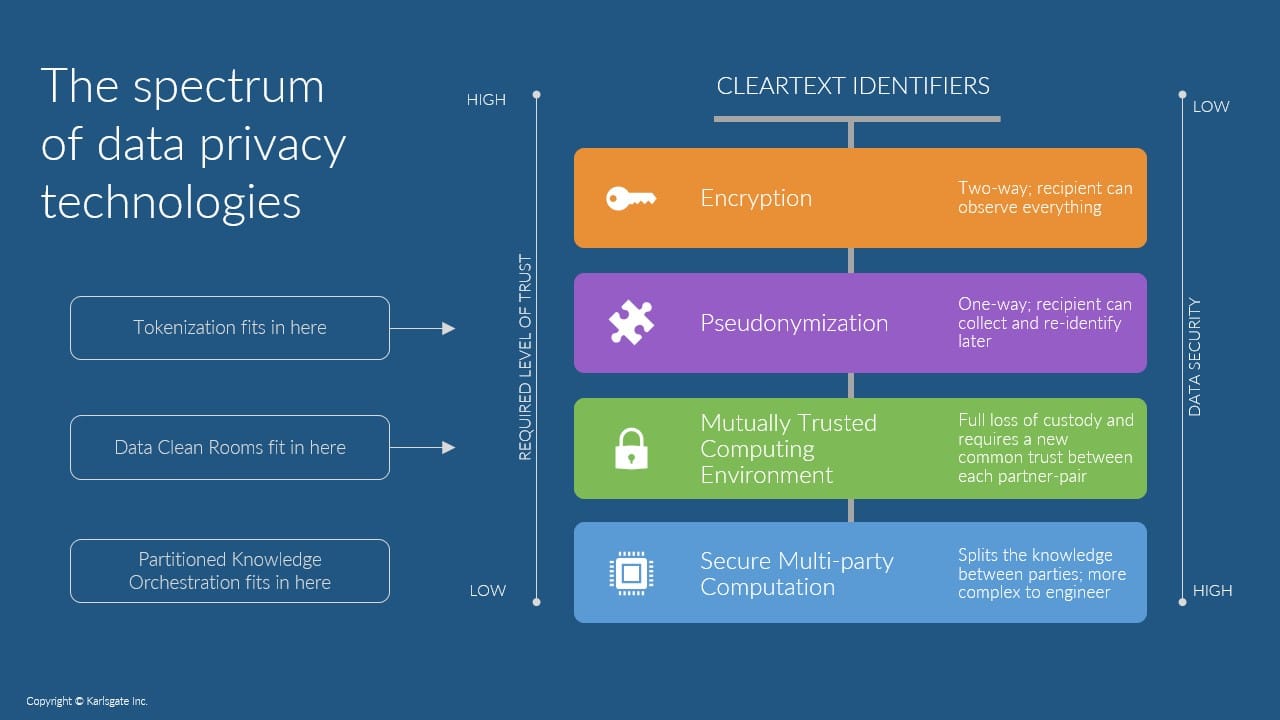

Changing the way privacy is managed begins with understanding the different ways in which data has historically been protected. From encryption and hashing to the more recent introduction of data clean rooms, the many options for securing data all provide protection in some capacity. Yet none of these methods alone fully protects data without processing complications or limiting functionality. So, what’s the best way?

The reality is that it depends on your goals. So, for today, let’s narrow our focus to the goal of protecting consumer identities while solving the “linkage problem.” The critical linkage problem can be defined as:

Two independent entities (public or private) are each managing a dataset about individuals. The understanding of each individual’s identity is achieved using various identifiers such as name, postal address, email, and/or social security number. However, these components of personal data are sensitive and are tied to personal privacy rights, regulatory restrictions, and/or ethical handling concerns.

The problem lies in how to enable the two independent entities to share an understanding of the individuals common to both datasets without sharing any personal data and without inadvertently allowing reidentification of individuals not in common (i.e., outside of the desired intersection).

Now that we have a focus for our discussion, let’s evaluate the evolution of protection methods.

Evolving Data Protection to Keep up with Modern Needs

There was once a time, before privacy concerns and regulations were prevalent, in which sending clear text was the norm – and you may be surprised, but it does still happen today. Rising concern then led to the introduction of encryption, a method that protects data well while in transit – but requires that you give your partner custody of a copy of your data along with the decryption key. This makes the data fully re-identifiable once the recipient decrypts it and leaves unnecessary, residual data in your partner’s environment: the identities and attributes of individuals who were not common to both your file and your partner’s.

Hashing then came into play, scrambling data in a way that’s very difficult to reverse. However, the data still changes custody, which leaves it open to future re-identification attempts against an identity graph. Also, hashed identifiers are still considered personal data under GDPR (pseudonymized rather than anonymized), so hashing alone does not take data out of the regulation’s scope, meaning in today’s data ecosystem, it’s still not enough.
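To make the re-identification risk concrete, here is a minimal sketch of how a recipient could match unsalted hashes against identifiers it already holds. The sample emails, the `sha256_hex` helper, and the small `identity_graph` list are all hypothetical stand-ins chosen for illustration; a real identity graph would be far larger, but the lookup works the same way.

```python
import hashlib

def sha256_hex(value: str) -> str:
    """Hash a normalized identifier with plain, unsalted SHA-256."""
    return hashlib.sha256(value.strip().lower().encode("utf-8")).hexdigest()

# Hashed emails handed to a partner (hypothetical sample data).
received_hashes = {sha256_hex("alice@example.com"), sha256_hex("carol@example.com")}

# Identifiers the recipient already holds or can obtain
# (an assumed, tiny stand-in for an identity graph).
identity_graph = ["alice@example.com", "bob@example.com", "carol@example.com"]

# Precompute hash -> email once, then look up every received hash.
lookup = {sha256_hex(email): email for email in identity_graph}
reidentified = sorted(lookup[h] for h in received_hashes if h in lookup)

print(reidentified)
```

Because anyone can compute the same unsalted hash, the hashed file stays linkable to any identifier the recipient can guess or already knows.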

More recent innovations in privacy-enhancing technology include federated learning, differential privacy, and fully homomorphic encryption. While each is powerful for use in analysis, modeling, and data obfuscation, none of these methods adequately addresses the data linkage problem – a cause for many challenges when it comes to data collaboration. What we’re still missing is the ability to adequately protect personally identifiable information (PII) while still sharing meaningful insights on matched identities.

Even data clean rooms, which have become more common today, require that both partners agree on which clean room solution to use and then allow an additional third party to gain custody over all datasets used for matching. It’s a full-sharing event with consent and security obligations, subjecting PII to a level of risk.

As we usher in the “Protected Data Age,” a new era in which harnessing the power of data no longer means sacrificing consumers’ privacy, it’s time to acknowledge the current limitations with legacy data protection methods and embrace a better way to safely share data.

How a Zero-Trust Approach to Security Can Improve Your Data Collaboration

In order to usher in this new age, we first need to acknowledge the reality of the world today. We have seen a significant rise in hacker activity in recent years. In 2022 alone, the U.S. Department of Health and Human Services reported nearly 600 healthcare data breaches affecting more than 40 million individuals. And this trend is by no means specific to healthcare. A recent study revealed that with current data-protection methods, cybercriminals can still penetrate 93% of company networks. To respond to this ever-increasing threat, we need to assume a breach will be attempted, and therefore share data in a way that eliminates the associated risk altogether. This is known as a zero-trust approach.

The philosophy behind zero-trust architecture is based on a very real understanding that nearly every digital interaction is risky by nature. Zero-trust frameworks can be applied to nearly every aspect of an organization’s cybersecurity approach. It means that devices and networks are validated, users verified, and access to data and files is strictly controlled, limited to who needs access and when. It also means setting up data sharing in a way that keeps networks safe and prevents the need for identifiable data to leave the secure environment.

Data sharing often means the data is only as secure as the organizations with which we share; zero trust means we can share without exposing our data to someone else’s risks. With many of the existing methods for sharing data, organizations lose control of their data and re-identification is possible. Those methods are not the strongest protection against data loss or leakage.

In contrast, emerging methods of safeguarding PII, along with new privacy-enhancing technologies, combine into a robust process for securing data. One such innovation leverages partitioned knowledge orchestration involving two partners that want to share insights and a third-party facilitator that never accesses any identifiable or usable data. This approach enables partners to match and identify overlaps in their data without ever losing custody of any of it. It also enables sharing of insights, but only as non-identifiable attributes and only on matched records. This creates a zero-trust framework for data sharing and blocks re-identification. Layering in best practices associated with salted hashing and encryption makes it so that neither sharing partner nor the blind third-party facilitator can make use of any identifying elements that they did not already have. Further, because data partners only learn new insights about individuals for whom they already had data, there’s no residual data left behind.
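As a rough illustration of the idea of a blind facilitator, the sketch below has two partners derive opaque tokens from their identifiers and hand only those tokens to a third party that computes the overlap. This is a simplified toy under stated assumptions, not the actual protocol described above: it uses a keyed hash (HMAC-SHA256) with a secret shared only by the two partners as a stand-in for the salted hashing mentioned, and the names `tokenize`, `facilitator_match`, and the sample emails are hypothetical.

```python
import hashlib
import hmac
import secrets

def tokenize(identifiers, shared_key):
    """Map the HMAC-SHA256 token of each normalized identifier to the local record."""
    return {
        hmac.new(shared_key, ident.strip().lower().encode("utf-8"),
                 hashlib.sha256).hexdigest(): ident
        for ident in identifiers
    }

def facilitator_match(tokens_a, tokens_b):
    """Blind facilitator: sees only opaque tokens and returns those in both sets."""
    return set(tokens_a) & set(tokens_b)

# Assumption: the key is agreed between the two partners only;
# the facilitator never receives it, so it cannot recompute tokens.
shared_key = secrets.token_bytes(32)

partner_a = tokenize(["alice@example.com", "bob@example.com"], shared_key)
partner_b = tokenize(["Bob@Example.com ", "dave@example.com"], shared_key)

# Only the token sets (never the identifiers) reach the facilitator.
matched = facilitator_match(partner_a.keys(), partner_b.keys())

# Each partner resolves matched tokens against its own records only.
a_overlap = sorted(partner_a[t] for t in matched)
b_overlap = sorted(partner_b[t] for t in matched)
```

In this toy version each partner receives back only tokens it could already produce from its own file, so it learns which of its records overlap and nothing about the other side's unmatched records. A production design would need additional protections (for example, against a partner and the facilitator colluding), which this sketch does not attempt.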

A matching process like this, without disclosure, eliminates the largest and otherwise unavoidable risk vector associated with sharing data: the dissemination of identities. This means that a business’s risk profile is no longer an aggregation of the risk profiles of each and every data partner with whom it works. In a world where there is a tidal wave of demand for data from novel, more extensive, and more comprehensive sources, while, at the same time, hackers are continuing to escalate their activity, it’s essential that we keep looking for innovative approaches for connecting data safely and securely.

Technology is changing; how we deal with big data must change with it.